Analog vs. Digital Clipping

Ideally, we want the acoustic energy produced by musicians to be captured, stored and reproduced with complete integrity. One of the definitions of fidelity is "the degree of exactness with which something is copied or reproduced". The key word in this definition is "exactness". A perfect reproduction, something with absolute fidelity, would be indistinguishable from the original. That's what we want with regard to the music that we enjoy, right?

So if there's any kind of distortion between the original source and the reproduced version, fidelity suffers. We saw yesterday that recording systems have limits: they are restricted in frequency range and in dynamic range. Clipping is an amplitude distortion. It happens when an incoming signal exceeds what the electronics or storage medium can handle. In the case of analog tape, the headroom is +12 dB. If a signal exceeds that amount, the output will be less than expected: the loudest peaks are squashed instead of being reproduced in full.
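To put the +12 dB figure in linear terms: a level difference in dB corresponds to an amplitude (voltage) ratio of 10^(dB/20). The sketch below assumes the +12 dB is measured relative to the tape's nominal operating level, which the text does not state explicitly; the function names are illustrative only.

```python
import math

def db_to_amplitude_ratio(db: float) -> float:
    """Convert a level difference in dB to a linear amplitude (voltage) ratio."""
    return 10 ** (db / 20)

def amplitude_ratio_to_db(ratio: float) -> float:
    """Convert a linear amplitude ratio back to a level difference in dB."""
    return 20 * math.log10(ratio)

# Assumption: +12 dB of headroom above the nominal operating level.
# That is roughly 4x the nominal amplitude before the tape saturates.
print(f"{db_to_amplitude_ratio(12):.2f}x")  # 3.98x
```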

Analog electronics can still output a signal, but it will be "distorted" relative to the ideal signal. Analog is far more forgiving than digital in how it distorts: the output waveforms are not ideal, but at least their peaks are "rounded". In fact, musicians and recording engineers use analog distortion creatively all the time.

Virtually all commercial audio releases dating from before the '80s were recorded on analog tape. This was before digital production tools were available to professionals and before digital distribution formats such as the compact disc. This means that every downloadable digital track from before 1980 had to go through an analog-to-digital conversion. It doesn't matter whether the downloaded track comes from iTunes, Rhapsody or Yahoo: some engineer had to set the output level of an analog tape machine AND the input level of the ADC (analog-to-digital converter) in order to make the initial digital conversion.

Unlike analog clipping, which transitions smoothly from the desired waveform to a distorted version of it, nothing about digital clipping is gentle or smooth. Digital amplitudes are represented by discrete numeric values. In PCM-encoded signals, the number of available values is set by the number of bits in each digital word: it equals 2 raised to the power of the number of bits. For example, an 8-bit digital word can hold at most 256 unique values (2 × 2 × 2 × 2 × 2 × 2 × 2 × 2, or 2 to the 8th power).
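The "2 to the power of the number of bits" rule is easy to check for common word lengths. The sketch below also shows the signed range that a two's-complement PCM word covers, which is how 16- and 24-bit audio samples are usually stored; the helper names are hypothetical.

```python
def pcm_levels(bits: int) -> int:
    """Number of distinct values a PCM word of `bits` bits can represent."""
    return 2 ** bits

def pcm_range(bits: int) -> tuple[int, int]:
    """Smallest and largest sample value for a signed (two's-complement) PCM word."""
    return -(2 ** (bits - 1)), 2 ** (bits - 1) - 1

for bits in (8, 16, 24):
    lo, hi = pcm_range(bits)
    print(f"{bits}-bit: {pcm_levels(bits):,} values, from {lo:,} to {hi:,}")
# 8-bit: 256 values, from -128 to 127
# 16-bit: 65,536 values, from -32,768 to 32,767
# 24-bit: 16,777,216 values, from -8,388,608 to 8,388,607
```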

Unlike voltages in a wire, digital values beyond the maximum code simply don't exist: there is no going past the ceiling. Whereas tubes and other analog electronics can output values beyond their maximum (albeit distorted), a digital system outputs a perfectly flat plateau. That's why it's extremely important that the original transfer from the master tape to PCM or DSD be done correctly.
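The contrast between a flat digital plateau and a rounded analog curve can be sketched numerically. This is an illustration under stated assumptions, not a model of any particular tape machine or converter: digital clipping is modeled as an ideal 24-bit quantizer that saturates at its largest code, and "analog" saturation is stood in for by a tanh curve, a common textbook choice.

```python
import math

FULL_SCALE_24 = 2 ** 23 - 1  # largest positive 24-bit sample value (8,388,607)

def to_pcm24(x: float) -> int:
    """Quantize a float (1.0 = full scale) to a 24-bit integer, saturating at the limits.

    Values past full scale have no code to map to, so they are pinned to the
    largest or smallest representable sample: digital (hard) clipping.
    """
    code = round(x * FULL_SCALE_24)
    return max(-FULL_SCALE_24 - 1, min(FULL_SCALE_24, code))

def soft_clip(x: float) -> float:
    """Illustrative stand-in for gentle analog saturation (assumption: tanh curve)."""
    return math.tanh(x)

# One cycle of a sine wave driven 50% past full scale (peak amplitude 1.5).
N = 48
wave = [1.5 * math.sin(2 * math.pi * n / N) for n in range(N)]

hard = [to_pcm24(s) for s in wave]
soft = [soft_clip(s) for s in wave]

# Hard clipping: a run of identical samples at the ceiling (the flat plateau).
plateau = sum(1 for s in hard if s == FULL_SCALE_24)
print("samples pinned at positive full scale:", plateau)
# Soft clipping: the peak is compressed and rounded but never flattened.
print("soft-clipped peak:", round(max(soft), 4))
```

In the hard-clipped version, every sample that should have exceeded full scale lands on exactly the same value, while the tanh version keeps a continuously curved peak.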

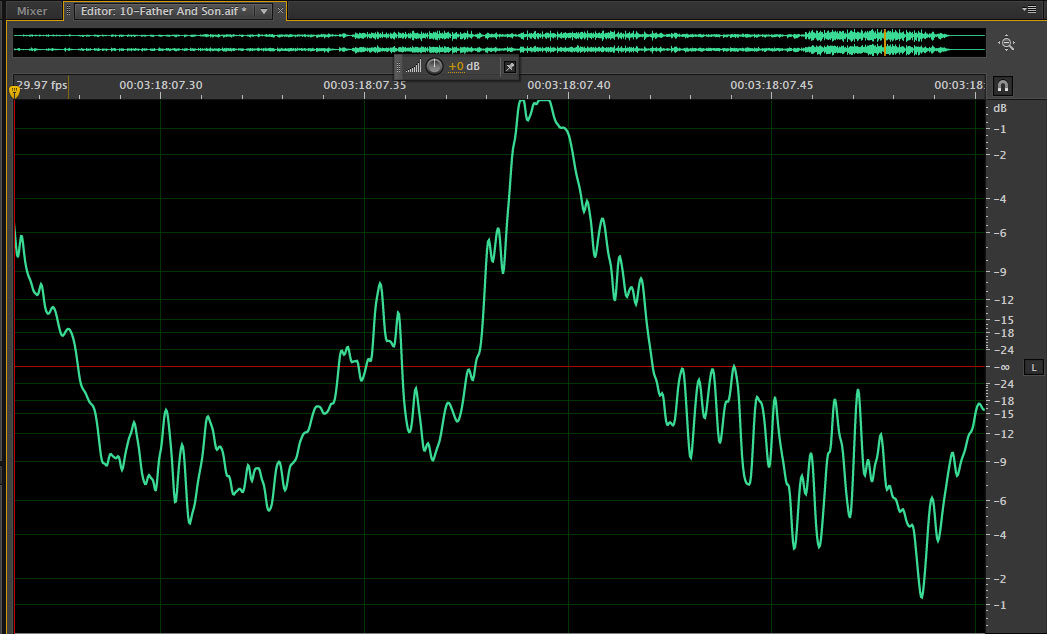

Figure 1 – A waveform from the HDtracks 96 kHz/24-bit download of "Father and Son" by Cat Stevens showing clipping.

The files that I downloaded from HDtracks the other day had clipped samples in them. The first tune I checked, "Father and Son", sounds really good, and I certainly didn't hear any audible distortion. However, when I ran it through a digital application that checks for clipped samples, sure enough, the loudest moments in the track were clipped. It happened in the 96 kHz/24-bit version AND the 192 kHz download. This means that it most likely happened during the original transfer OR during the new mastering that Ted Jensen did while he worked on the digital transfer. I honestly can't understand why the files weren't checked before being uploaded to the servers, or why they haven't been replaced.
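The post doesn't name the application used, so the following is only a sketch of one common heuristic for flagging clipping: look for runs of consecutive samples sitting at the converter's full-scale value. It assumes the samples have already been decoded from the file into signed integers; the function name and the `min_run=3` threshold are hypothetical choices, not settings from any particular tool.

```python
def find_clipped_runs(samples, bits=24, min_run=3):
    """Return (start_index, length) for each run of at least `min_run` consecutive
    samples sitting at the positive or negative full-scale value."""
    hi = 2 ** (bits - 1) - 1
    lo = -(2 ** (bits - 1))
    runs = []
    start = None
    for i, s in enumerate(samples):
        at_limit = s >= hi or s <= lo
        if at_limit and start is None:
            start = i                      # a possible clipped run begins
        elif not at_limit and start is not None:
            if i - start >= min_run:       # long enough to count as clipping
                runs.append((start, i - start))
            start = None
    # A run that continues to the very last sample.
    if start is not None and len(samples) - start >= min_run:
        runs.append((start, len(samples) - start))
    return runs

samples = [0, 5_000_000, 8_388_607, 8_388_607, 8_388_607, 8_388_607,
           6_000_000, -8_388_608, 0]
print(find_clipped_runs(samples))  # [(2, 4)]
```

A single sample touching full scale is not flagged here, because a legitimate peak can land exactly on the maximum; several identical full-scale samples in a row are a much stronger sign of a flattened waveform.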

When consumers purchase expensive "master quality" files, every effort should be made to ensure that they have been done correctly. In this case, something went wrong.

And there are other interesting things about the tracks from "Tea for the Tillerman". More on those tomorrow.

Perhaps a deeper analysis of the audio source is needed to know whether this clipping is a bad or a good thing.

I'm not an expert in audio, but I have some understanding of signal processing, so correct me if I'm wrong.

When you do an analog-to-digital conversion, you must define a sample rate (192 kHz in this case), a bit depth (24 bits) and the signal amplitude range. This last item defines the resolution of your digital signal (each step of the digital values represents X volts of the analog signal).

Suppose your signal stays between -1 V and +1 V 99% of the time and reaches ±1.3 V in the remaining 1%. Either you clip that 1%, or you compromise the resolution of the entire digital signal.

If you take ±1 V as the amplitude range with a bit depth of 24 bits, each step will be about 0.1192 µV (119.2 nV). Using ±1.3 V, it will be about 0.1550 µV (155.0 nV), a step 30% larger.
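The step-size arithmetic can be checked with a short sketch. It assumes an ideal converter whose 2^bits codes evenly span the range from -V_peak to +V_peak, and it ignores analog noise and the exact code mapping of real converters; `lsb_size` is a hypothetical helper name.

```python
import math

def lsb_size(v_peak: float, bits: int) -> float:
    """Voltage per quantization step for an ideal converter spanning -v_peak..+v_peak."""
    return 2 * v_peak / 2 ** bits

step_1v = lsb_size(1.0, 24)
step_13v = lsb_size(1.3, 24)
print(f"±1.0 V: {step_1v * 1e9:.1f} nV per step")   # ±1.0 V: 119.2 nV per step
print(f"±1.3 V: {step_13v * 1e9:.1f} nV per step")  # ±1.3 V: 155.0 nV per step

# A 30% larger step is the same as giving up log2(1.3) bits of resolution.
ratio = step_13v / step_1v
print(f"step ratio {ratio:.2f}, i.e. {math.log2(ratio):.2f} bits or "
      f"{20 * math.log10(ratio):.2f} dB")  # step ratio 1.30, i.e. 0.38 bits or 2.28 dB
```

Expressed this way, widening the range from ±1 V to ±1.3 V costs a little over a third of one bit out of 24.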

Is it better to clip this 1% above 1 V, or to accept a 30% coarser resolution across the whole signal so that this 1% isn't clipped?
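One way to frame the question is to compare the size of the errors each choice introduces, using the hypothetical numbers above (±1 V for 99% of the signal, peaks of ±1.3 V). This is a back-of-the-envelope sketch assuming an ideal 24-bit converter: rounding error is taken as at most half a step, and the clipping error on the loudest peak is approximated as the amount by which the peak exceeds the converter's range. Both helper names are hypothetical.

```python
def lsb_size(v_peak: float, bits: int) -> float:
    """Voltage per step for an ideal converter spanning -v_peak..+v_peak."""
    return 2 * v_peak / 2 ** bits

def error_budget(signal_peak: float, converter_peak: float,
                 bits: int = 24) -> tuple[float, float]:
    """Return (max rounding error for in-range samples,
    approximate error on the loudest peak due to clipping), both in volts."""
    rounding = lsb_size(converter_peak, bits) / 2
    clipping = max(0.0, signal_peak - converter_peak)  # ignores the half step at the ceiling
    return rounding, clipping

SIGNAL_PEAK = 1.3  # volts: the hypothetical occasional peak
for converter_peak in (1.0, 1.3):
    rounding, clipping = error_budget(SIGNAL_PEAK, converter_peak)
    print(f"range ±{converter_peak} V: rounding ≤ {rounding * 1e9:.1f} nV, "
          f"peak clipping error ≈ {clipping:.2f} V")
# range ±1.0 V: rounding ≤ 59.6 nV, peak clipping error ≈ 0.30 V
# range ±1.3 V: rounding ≤ 77.5 nV, peak clipping error ≈ 0.00 V
```

Under these assumptions the wider range adds about 18 nV of worst-case rounding error everywhere, while the narrower range introduces errors of up to about 0.3 V on the clipped peaks.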