Directional Hearing

Human hearing is an amazing sense. Our ear/brain apparatus can accommodate a range of dynamic levels that extends from the quietest sounds (the sound of our own heartbeat and nervous system in an anechoic chamber) to the roar of a fighter jet blasting off the deck of an aircraft carrier with full afterburners (not recommended without hearing protection). In terms of the energy delivered to our eardrums, this range spans a factor of a trillion or more. No other sense comes close. And while our frequency response is typically quoted as 20 Hz to 20 kHz, fully capturing a musical or natural sound may depend on higher, ultrasonic frequencies, or on the interplay of ultrasonic partials and overtones.
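Because decibels are logarithmic, a modest-looking number of decibels hides an enormous energy ratio: every 10 dB is a tenfold change in sound intensity. The short Python sketch below does the conversion. The 120 dB span is an assumed, commonly quoted figure for the distance between the threshold of hearing and the threshold of pain, not a measurement from this post, and the function names are just illustrative.

```python
import math

def intensity_ratio(level_db: float) -> float:
    """Convert a level difference in decibels to a ratio of sound intensities."""
    return 10 ** (level_db / 10)

def level_db(ratio: float) -> float:
    """Convert an intensity ratio back to a level difference in decibels."""
    return 10 * math.log10(ratio)

# Assumed example: a 120 dB span between the quietest and loudest tolerable sounds.
span = intensity_ratio(120)
print(f"{span:.0e}")  # prints 1e+12
```

A 120 dB span works out to an intensity ratio of 10^12, a trillion to one.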

But what about directionality? How is it that some clever signal processing can change our perception of where a sound comes from? The Headphones[xi]™ tracks that I posted to the FTP site a couple of days ago clearly demonstrate the dramatic difference between a traditional stereo mix played through headphones and one that has been “processed” to expand the listening experience into a “modeled” physical space.

So today I present a short primer on “directional hearing”. I’ll continue with an explanation of how production techniques and post processors can maximize immersive listening…even through a set of headphones.

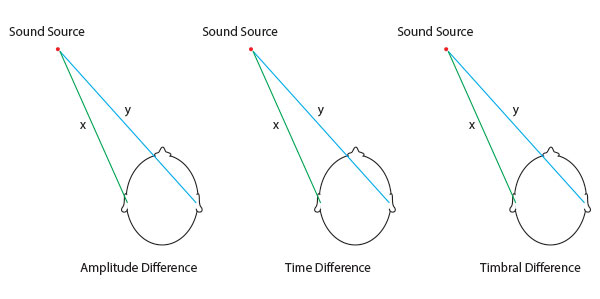

There are three primary factors that contribute to directional hearing. The first is the difference in amplitude delivered to our brains by our two ears. Sound amplitude diminishes by a formula called the “inverse square rule” (I wrote about this some months back and included a graphic that illustrates the concept…to reread that post, you can click here.) Because our ears are located some 5 inches apart and are not “coincident” (meaning they don’t occupy the same point in space), any sounds that are not directly in front or behind us will be heard as different amplitudes by our ears. See figure 1 below:

Figure 1 – A bird’s eye view of an off axis sound source and the factors that cause us to experience the sound to the left of center.
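To put a rough number on the inverse square effect, the Python sketch below places a point source at a given distance and angle and compares its distance to each ear. It assumes a simplified bird’s-eye geometry like Figure 1, ears about 17.5 cm (roughly 7 inches) apart, and no head in the way at all; the function and constant names are hypothetical.

```python
import math

EAR_SPACING_M = 0.175  # assumed distance between the ears (about 7 inches)

def ear_distances(source_distance_m: float, azimuth_deg: float) -> tuple[float, float]:
    """Distances from a point source to the (left, right) ears.

    The head sits at the origin facing +y; positive azimuth puts the source to the left.
    """
    theta = math.radians(azimuth_deg)
    source = (-source_distance_m * math.sin(theta), source_distance_m * math.cos(theta))
    left_ear = (-EAR_SPACING_M / 2, 0.0)
    right_ear = (EAR_SPACING_M / 2, 0.0)
    return math.dist(source, left_ear), math.dist(source, right_ear)

def level_difference_db(source_distance_m: float, azimuth_deg: float) -> float:
    """Left-minus-right level difference from the inverse square law alone (no head shadow)."""
    d_left, d_right = ear_distances(source_distance_m, azimuth_deg)
    # Intensity falls as 1/r^2, so 10*log10((d_right/d_left)**2) == 20*log10(d_right/d_left).
    return 20 * math.log10(d_right / d_left)

for distance in (0.5, 1.0, 10.0):
    print(f"{distance:5.1f} m at 45 degrees: {level_difference_db(distance, 45):.2f} dB")
```

For a source 1 m away at 45 degrees this gives a difference of roughly 1 dB, and it shrinks to about a tenth of a dB at 10 m, which is one reason the head-shadow effect described below carries so much of the level cue for distant sources.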

In addition to the amplitude difference between our left and right ears for a sound in front and to the left of center, there is a timing difference between our ears as well. Sound travels at about 1,125 feet (343 meters) per second in air at room temperature. For a sound coming from the front left, it takes slightly less time for the sound to reach our left ear than it does to reach our right ear. The difference is tiny, well under a millisecond, but our ears and brain can detect it and place the sound in the right location.
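The timing cue is easy to estimate. The sketch below uses Woodworth’s spherical-head approximation, ITD = (a/c)(θ + sin θ), a textbook model that treats the head as a rigid sphere of radius a and the source as distant. The head radius of 8.75 cm and the 343 m/s speed of sound are assumed values, not figures from this post.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # in air at about 20 °C (roughly 1,125 ft/s)
HEAD_RADIUS_M = 0.0875      # assumed "average" head radius, a common modelling value

def woodworth_itd(azimuth_deg: float) -> float:
    """Interaural time difference in seconds (Woodworth spherical-head approximation).

    Assumes a distant source between 0 degrees (straight ahead) and 90 degrees (to one side).
    """
    if not 0.0 <= azimuth_deg <= 90.0:
        raise ValueError("azimuth must be between 0 and 90 degrees")
    theta = math.radians(azimuth_deg)
    return (HEAD_RADIUS_M / SPEED_OF_SOUND_M_S) * (theta + math.sin(theta))

for azimuth in (0, 15, 45, 90):
    print(f"{azimuth:3d} degrees: {woodworth_itd(azimuth) * 1e6:6.1f} microseconds")
```

With these assumptions, a sound arriving from directly to one side reaches the far ear about 0.66 ms after the near ear, while a sound straight ahead reaches both ears at the same time.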

Finally, there is a timbral difference between our ears. The pathway to the left ear is not blocked by our thick head, although the sound is still filtered by the outer ear, or pinna. The right ear, on the other hand, is “shadowed” by our head and loses some of the high-frequency information. These subtle timbral differences between the direct sound and the filtered sound contribute to our ability to hear directionally.
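A rough way to see why head shadowing mostly affects the high frequencies is to compare a sound’s wavelength (speed of sound divided by frequency) with the size of the head. Low frequencies, whose wavelengths are much larger than the head, bend around it; wavelengths comparable to or smaller than the head are partly blocked. The 17.5 cm head width below is an assumed modelling value, and this is a rule of thumb, not a model of the shadow itself.

```python
SPEED_OF_SOUND_M_S = 343.0  # in air at about 20 °C
HEAD_WIDTH_M = 0.175        # assumed head width (about 7 inches)

def wavelength_m(frequency_hz: float) -> float:
    """Wavelength of a sound in air: speed of sound divided by frequency."""
    return SPEED_OF_SOUND_M_S / frequency_hz

for f in (100, 500, 1000, 2000, 4000, 8000):
    lam = wavelength_m(f)
    print(f"{f:5d} Hz: wavelength {lam:5.2f} m = {lam / HEAD_WIDTH_M:5.1f} x head width")

# Frequency at which one wavelength spans the head.
print(f"crossover: {SPEED_OF_SOUND_M_S / HEAD_WIDTH_M:.0f} Hz")
```

With these assumptions, one wavelength spans the head at about 1960 Hz; well below that, the head casts little acoustic shadow.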

How much each of these three factors contributes depends on the frequencies being heard. Our directional hearing is better in the middle frequency range than in the lower ranges, and the overall level of a sound also changes our perception.
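To tie the timing and level cues together, here is a deliberately crude Python sketch that places a mono signal to one side by delaying and attenuating the far-ear channel. It is not the processing used for the Headphones[xi] tracks, and it ignores the frequency-dependent pinna and head filtering that more serious processors model. The function name, the Woodworth timing model, and the 10 dB maximum level difference are all assumptions for illustration.

```python
import math

SPEED_OF_SOUND_M_S = 343.0
HEAD_RADIUS_M = 0.0875  # assumed effective head radius

def naive_binaural_pan(mono, sample_rate, azimuth_deg, max_ild_db=10.0):
    """Return (left, right) sample lists for a mono signal placed at azimuth_deg.

    Positive azimuth = left, negative = right, limited to -90..90 degrees.
    max_ild_db is a hypothetical, frequency-independent stand-in for head shadow.
    """
    if not -90.0 <= azimuth_deg <= 90.0:
        raise ValueError("azimuth must be between -90 and 90 degrees")
    theta = math.radians(abs(azimuth_deg))
    # Timing cue: Woodworth ITD, rounded to whole samples.
    itd_s = (HEAD_RADIUS_M / SPEED_OF_SOUND_M_S) * (theta + math.sin(theta))
    delay = round(itd_s * sample_rate)
    # Level cue: attenuate the far ear more as the source moves to the side.
    far_gain = 10 ** (-max_ild_db * math.sin(theta) / 20)

    near = [float(s) for s in mono] + [0.0] * delay  # pad so both channels match in length
    far = [0.0] * delay + [s * far_gain for s in mono]
    return (near, far) if azimuth_deg >= 0 else (far, near)

# Usage: place a single click 60 degrees to the left at 48 kHz.
click = [1.0] + [0.0] * 63
left, right = naive_binaural_pan(click, 48_000, 60)
```

A fixed delay plus a broadband gain is about the simplest possible model; listening to it on headphones typically still puts the sound inside the head, which is why fuller processing that models the ears and the room matters.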

What’s the essential difference between this technology and ambisonics?

Boy, that’s a bigger answer than will fit in a quick reply. I’ll talk about ambisonics in a full post soon.

Sorry. I meant to post my question on the Headphone Surround thread.

Of more interest to me is how headphones, firing information directly into our ears (flattened, depending on the headphones), can fool our brain into hearing surround sound, given that they make no use of our ear shape, which, I would assume, our brain also uses to determine the spatial coordinates of a sound.

“The pathway to the left ear is not blocked by our thick head” – you speak for yourself Doc! ;-)))

Interesting reading.

I was.

I have now listened to some of the headphone mixes and I have to say the results are very interesting. But let me say first that I did these tests on my computer, albeit with a 24-bit capable sound-card, and fairly naff (read that as very naff) headphones (cans).

I could definitely hear the difference: the sound is no longer in the centre of my head, so the premise definitely works. But I kept hearing a shift in the sound, as if the performer(s) were drastically changing position. Is this part of the demo, i.e. switching back and forth for A-B testing?

I am in the process of buying a new set of cans suitable for my main system (in quality terms), and a final verdict must wait till then, but I have to say there is definitely something very good here. Without a doubt this is worth pursuing, and I think it is very exciting.

Cheers Doc.

I think I should have read your post more carefully, Doc. I have a pair of Beyer cans for mobile use; they ain’t a lotta cop, but they’re much better than the rubbish ones on my computer, and with them on my NAIM streamer it is very obvious when you A-B the sound. Sorry about that!

Anyway the results are now astonishing, in fact so astonishing that I need convincing that it is not April 1st today! With the treated files my little Beyer cans sound as if I had spent a lot more money on them than I did – A LOT MORE. Going back to the untreated files on some of the tracks was shocking. Honestly I do not know where to start here, I really don’t.

I tried a little test myself. I have been listening to the great Terry Riley’s ‘In C’ by ‘Bang on a Can’ a lot recently; it’s a haunting piece of music that I have played on and off for years. So I listened to it on my Beyer cans and it sounded pretty dead, but after switching to speakers it came alive again. This was basically what I was hearing in your recordings.

What is interesting, though, is that technically you are distorting the signal and yet it sounds better!

btw, is there any chance that someone is making a box that we could put between our cans and amp to do what your box of tricks did?

The Smyth Room Realiser by Smyth Research does this trick…and on a personalized basis. I can put you in touch, if you’re interested.

I have just read the following about the Smyth Research Room Realiser:

“The Realiser package includes all needed accessories and a pair of STAX electrostatic headphones.”

So they bung in a pair of Stax headphones, and all for under $500? NOT, I think!

Remember Doc I have got to get it past ‘She who must be obeyed’.

The price of the Realiser is not under $500…it is just over $3000, I believe. I can pass your information along to the local expert and salesperson, if you’re seriously interested in the device. And remember, this is a lot less expensive than a good amplifier and set of speakers!

The Smyth Research device is a program with dedicated hardware. In my opinion, the product should be sold as a DSP with an operating system. Plugins could be added to the DSP via USB or SD. Maybe receivers that have built-in DSPs should be upgradable too. I buy new components every five to ten years; between those times, you could sell me plugins that I can keep until I find better ones.

There are several plugin versions of headphone processors. I’ll write about the Darin Fong and Headphones 3D apps soon.