Super Live Audio: Part II

The engineers at KV2 are concerned with producing high SPLs without distortion in a hall or large concert venue. They don’t have to record and then play back music because they design and deliver live sound. But they do want to process the sound in the digital domain in order to minimize distortion of various kinds and maintain very high timing accuracy.

The traditional analog signal path from microphones and direct boxes into an analog console and then on to an array of amplifiers and speakers can deliver amplified music and vocals very accurately. In fact, most live concert PA systems have purely analog signal paths but are under the control of digital recall and automation systems. So why would KV2 be so proud of their “new format”, which depends on converting the analog output signals into DSD (at 20 MHz, or 7 times the standard 2.8224 MHz rate)?

The ideal system keeps the analog signals…the microphones and other instruments plugged directly into the console…in the analog domain. The correct place for digital technology is in the control of various analog parameters such as switch positions, fader levels, panning, mutes, EQ etc. A modern concert is a preprogrammed set of digital cues that configure the console for each tune in turn. The balancing of levels and “timbres” happens during the sound check and before.

The KV2 website endorses a threefold description of audio. The paragraph from their piece reads:

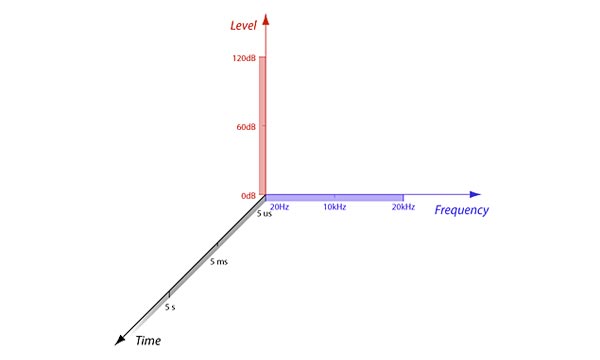

Sound is a three dimensional object consisting of three primary parameters, these are:

1 – The level

2 – The frequency

3 – Time

The common hearing range of the human ear is from 0 to 120 dB of the signal level, the frequency range is from 20Hz to 20kHz, but it is often neglected to recognize the importance of resolution in time. Human hearing is able to recognize time definition, (the difference in incoming sound), up to 10μs,however the latest research has found, that it is even less (5μs).

They include the following illustration:

Figure 1 – A graphic from KV2 audio showing their “definition of sound”.

It’s interesting to see how they define sound. The opening sentence, “The common hearing range of the human ear is from 0 to 120 dB of the signal level”, is a little stilted as English and is also inaccurate. Human hearing can perceive a very wide range of amplitudes. Traditionally, the maximum amplitude that can be “heard” without causing pain and hearing damage is 130 dB SPL…that means it’s referenced to actual sound pressure in the air. We’ve talked about this as dynamic range because we want to maximize the difference or relationship between the quietest and the loudest sounds in a selection of recorded music. These days DACs can deliver up to 130 dB of range but very few recordings deliver that much…virtually none. And the range at a live concert is much, much less. Even at home or in a studio, numbers above 80-90 dB are rare.
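To put rough numbers on these decibel figures, here is a minimal Python sketch. It assumes the standard 20 µPa reference for dB SPL and uses the textbook ideal-quantizer figure (about 6.02 dB per bit plus 1.76 dB for a full-scale sine) for PCM word lengths. The function names are hypothetical, and the PCM numbers are theoretical ceilings, not measured specifications of any DAC or recording.

```python
import math

REFERENCE_PRESSURE_PA = 20e-6  # standard 0 dB SPL reference (assumed here)

def spl_to_pascals(db_spl):
    """Convert a sound pressure level in dB SPL to RMS pressure in pascals."""
    return REFERENCE_PRESSURE_PA * 10 ** (db_spl / 20)

def pcm_dynamic_range_db(bits):
    """Ideal signal-to-quantization-noise ratio of a full-scale sine in N-bit PCM."""
    return 20 * math.log10(2 ** bits) + 10 * math.log10(1.5)

for level in (0, 90, 120, 130):
    print(f"{level:3d} dB SPL = {spl_to_pascals(level):.6f} Pa")

for bits in (16, 24):
    print(f"{bits}-bit PCM: about {pcm_dynamic_range_db(bits):.1f} dB theoretical range")
```

A 120 dB span is a pressure ratio of one million to one, which is why recordings and rooms that use only 80-90 dB of it are the norm.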

They cite the 20 Hz-20 kHz frequency range, which has been the traditional range used in most references. Only recently have there been indications that ultrasonics may play a role in human hearing.

Then there’s the “often neglected…importance of resolution in time”, according to KV2. “Human hearing is able to recognize time definition (the difference in incoming sound)”, according to their site.

Time in acoustics is about phase relationships. How much acuity do we have when hearing two sounds arriving at different times? It turns out that we’re very good at this…down to 5-10 microseconds. But just how important is the delta in arrival times compared with the other aspects of sound?

We hear sounds arriving at different times every day. The reflections of source sounds off the floor, the walls, and objects in a space create a myriad of different arrival times. It’s part of ambiance and reverberation etc. Obviously, we want two speakers to deliver sounds with accurate phase, but the effects of phase on the actual fidelity of a piece of music are not as great as those of the frequency or dynamic parameters.

Timing and phase are not equal partners to frequency and amplitude.

I find their arguments to be quite strange and nonsensical.

It sounds like KV2 is going with the myth that a sample rate like 44.1 kHz (or 96 kHz for that matter) is incapable of sufficient “time resolution” down to 5 microseconds. They seem to imply that stuff that happens in between samples is not captured. This myth seems to be cropping up more and more often, or at least I’m seeing it more frequently in the last year or so. But it is a myth: for any content below half the sample rate, the sound that happens between samples is captured perfectly by 24 bits and a sample rate of 96 kHz.
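The point about timing between samples can be checked numerically. The sketch below is only an illustration under stated assumptions: a 1 kHz sine is delayed by 5 microseconds, sampled at 44.1 kHz, rounded to 16 bits without dither, and the delay is then estimated from the samples alone by correlating against reference sine and cosine waves. The function name and test-signal parameters are hypothetical choices, not anything taken from KV2.

```python
import math

def estimate_delay(delay_s, fs=44100, freq=1000.0, n_samples=441, bits=16):
    """Sample a delayed sine, quantize it, and recover the delay from the samples.

    With fs=44100 and freq=1000, 441 samples span exactly 10 cycles, so the
    sine/cosine correlations below separate cleanly.
    """
    full_scale = 2 ** (bits - 1) - 1
    i_sum = 0.0  # correlation with the reference cosine
    q_sum = 0.0  # correlation with the reference sine
    for n in range(n_samples):
        t = n / fs
        x = math.sin(2 * math.pi * freq * (t - delay_s))
        x = round(x * full_scale) / full_scale  # quantize to the given word length
        i_sum += x * math.cos(2 * math.pi * freq * t)
        q_sum += x * math.sin(2 * math.pi * freq * t)
    # sin(a - p) = sin(a)cos(p) - cos(a)sin(p), so p = atan2(-I, Q)
    phase = math.atan2(-i_sum, q_sum)
    return phase / (2 * math.pi * freq)

sample_period_us = 1e6 / 44100
recovered_us = estimate_delay(5e-6) * 1e6
print(f"sample period: {sample_period_us:.2f} us")
print(f"recovered delay: {recovered_us:.4f} us")
```

The sample period at 44.1 kHz is roughly 22.7 microseconds, yet the 5 microsecond shift shows up in the sample values and can be recovered to well under a tenth of a microsecond. The spacing between samples limits bandwidth, not the timing precision of content inside that bandwidth.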

Not related to topic but taken by surprise

I was reading a well-respected magazine this afternoon and I was surprised to encounter the following sentence:

“In addition, we have improved the sound quality of our Misa Criolla by up-conversion of the original CD rip to a resolution of 192 kHz/32- bit with apodization and dither applied using iZotope RX-3 Advanced.” — Charles Zeilig, Ph.D., and Jay Clawson (TAS January 2015)

Have I missed something?

These are the same guys that did the research on FLAC etc. I’ll take a look at the article…but am very doubtful about any fidelity change. They may have changed the sound of the piece and like it better but it’s never going to be high-resolution.

For those who understand that digital systems are about more than amplitude and frequency response, it’s not at all hard to believe they improved the sound. Many of the better DACs around have apodizing filters. These remove the pre-ringing that is present with typical finite impulse response filters. Upsampling and applying an apodizing filter would allow much of the same benefit to be heard on DACs with standard FIR filters, because the pre-ringing introduced at 192 kHz is shorter and so is probably less audible, or not audible at all.

It may not be hard to imagine that they “changed” the sound but they most certainly did not improve the sound as you state. The fidelity of a recording is established at the time of the original source recording. I will be addressing the development, use, and effectiveness of apodizing filters. The notion that upsampling a CD-specification recording to 192 kHz/24 bits using digital processing would improve its fidelity is wishful thinking. The fidelity will remain as it was at 44.1 kHz/16 bits…the sound may change and be more euphonious to those that want to tweak things away from the mastered sound, but at what cost in terms of space and bandwidth?

They cannot improve the bandwidth or dynamic range (no argument there), but think about it this way: The signal is whatever it is, but you still have to play it back. Using a finite impulse response filter adds both pre- and post-ringing. That ringing does not exist within the digital data; it is only created upon reconstruction into an analog waveform. An apodizing filter shifts all of the ringing to after the impulse, which is closer to the physical phenomenon of sound generation. So, the processed file is at least as faithful to the original recording as playing it back through a FIR filter. I’ll reiterate that the processing described is simply a way to let people take advantage of advances in digital filter architecture without changing their hardware.
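The pre-ringing half of this argument can be seen in a generic filter. The sketch below is an illustration only, not the filter used in any particular DAC or in iZotope RX: it builds a symmetric (linear-phase) windowed-sinc low-pass with hypothetical parameters and compares the energy in the taps before and after the main peak. It does not implement an apodizing or minimum-phase design.

```python
import math

def windowed_sinc_lowpass(num_taps=63, cutoff=0.25):
    """Linear-phase low-pass FIR taps; cutoff is a fraction of the sample rate."""
    center = (num_taps - 1) / 2
    taps = []
    for n in range(num_taps):
        k = n - center
        if k == 0:
            ideal = 2 * cutoff  # limit of the sinc at its center
        else:
            ideal = math.sin(2 * math.pi * cutoff * k) / (math.pi * k)
        window = 0.5 - 0.5 * math.cos(2 * math.pi * n / (num_taps - 1))  # Hann
        taps.append(ideal * window)
    return taps

taps = windowed_sinc_lowpass()
peak = max(range(len(taps)), key=lambda i: abs(taps[i]))
pre_energy = sum(h * h for h in taps[:peak])        # ringing before the peak
post_energy = sum(h * h for h in taps[peak + 1:])   # ringing after the peak
print(f"peak at tap {peak}")
print(f"pre-ringing energy:  {pre_energy:.6f}")
print(f"post-ringing energy: {post_energy:.6f}")
```

Because the taps are symmetric about the peak, the energy before it equals the energy after it. That mirror image is the pre-ringing being discussed; a filter that concentrates its response after the peak gives up this symmetry, and with it the constant delay across frequency.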

I don’t subscribe to that magazine, so I can only go off the quote in the above post. We could, and probably would, argue round and round about what constitutes an improvement in fidelity, but an improvement in sound can only be evaluated by listening.

I’m in complete agreement with you on this. We do want to get the very best reproduction from whatever files and formats are captured. And using apodizing filters does maximize the accuracy of the playback.

Not to worry, Paul McGowan at PS Audio already has the “Product of the Year” Direct Stream DAC, which converts everything input to DSD output. He’ll save us all whether we want it or not.

I saw that announcement and I know that he’s honestly proud of the recognition. Oh well.

I believe there are studies showing that humans are not very good at detecting differences in relative phase, but do you have a source for saying that timing is less important than amplitude and frequency, or is that just your opinion? I would guess the timing differences we can most easily detect are those at the beginnings of sounds, which is different from relative phase of steady-state sine waves.

Here are some articles relevant to the topic:

http://boson.physics.sc.edu/~kunchur/Acoustics-papers.htm

http://phys.org/news/2013-02-human-fourier-uncertainty-principle.html

The “timing” issues that were discussed in the article focused on phase differentiation. And yes, there are studies that have shown that humans are poor at perceiving phase in sine waves or complex sounds. Thanks for the links…I’ll take a look.