Upgrading Fidelity: Fact or Fiction?

Is it possible to “improve” the basic audio fidelity of a recording after the fact? I know audiophiles strive to get every last bit of tone color, spatial distribution, warmth, clarity, and detail out of their sources. We’re constantly on the lookout for new and improved components, better cables, accessories, and acoustic treatments, but is there really an “Absolute Sound” as many believe? Or do we exist in a world full of fidelity variations that provide sonic bliss to some and irritation to others? The continual rants about the merits of expensive cables, consumer formats, speaker types, DACs, and audio accessories ignore the most fundamental fact of music recording and reproduction: the innate sound of the master as delivered by the replication facility. The fidelity of the master is the peak of the sonic pyramid; there is no besting the sound as distributed by the label. Period.

And we all know there are recordings that sound fabulous and recordings that sound like crap. Sadly, the ratio of bad recordings to good ones is way too high IMHO. I find it very difficult to listen to most contemporary commercial releases due to overcompressed sound, the reliance on virtual rather than real instruments, and the focus on repetitive rhythmic loops in lieu of song craft. Maybe my aesthetics are rooted in musicianship, production creativity, and expressive lyrics to the extreme, but I’m less impressed by the new music I hear than I expect to be.

The new generation of artists and producers isn’t really interested in high fidelity; they want hits. I can understand that. So we’re stuck with trying to get our personal “best” fidelity out of the existing masters. And just what constitutes a master? Is it the first incarnation of the album? Or is it perhaps the “remastered” version done by the record company on the 30th anniversary of a successful project? Maybe the Mobile Fidelity version tops them all? I know the mastering guys are busy making new transfers of the analog originals (who knows which versions) to high-resolution digital files for HDtracks and the other digital download sites. These same files will ultimately be available as “high-resolution” streams if MQA gets their way and TIDAL buys into their technology.

There are a number of companies (big ones like SONY and small mom-and-pop ones) claiming to be able to upconvert standard-fidelity music to “high-resolution”. I’ve already written about DSEE from Sony (click here for the article). Today, I’d like to take a serious look at, and listen to, a process that Eilex claims can convert standard-resolution CD fidelity sound to Hi-Res in real time! They claim it works on any digital source: radios, televisions, CDs, and DVDs.

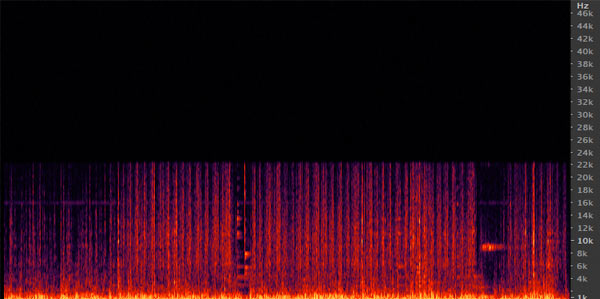

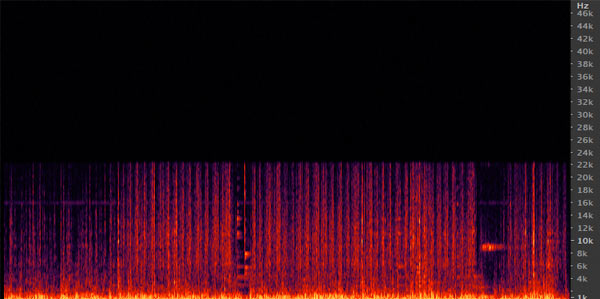

This is not simple sample rate conversion or sample interpolation. Their process “generates multiple even-order harmonics from the audio source” and adds them to the original audio. It’s an interesting idea. We all know that PCM digital files have a maximum high frequency limit due to the sampling rate and the Shannon-Nyquist frequency. The spectrum of a compact disc shows a plateau that ends just below 22 kHz. The uppermost harmonics are truncated, if they were there to begin with (which is not an unreasonable qualification).
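To make the idea concrete, here is a minimal Python sketch of my own. It is not Eilex’s algorithm, which is not described in any detail here. It assumes the CD-band audio has already been resampled to 96 kHz, uses a single 15 kHz sine as the source, and uses simple squaring as a stand-in even-order harmonic generator. The function names and the `amount` parameter are hypothetical.

```python
import math

CD_RATE = 44_100      # CD sampling rate in Hz
HIRES_RATE = 96_000   # assumed container rate for this illustration


def nyquist(sample_rate):
    """Highest frequency a PCM stream at this sampling rate can represent."""
    return sample_rate / 2


def sine(freq, sample_rate, count):
    """Generate `count` samples of a unit-amplitude sine wave."""
    return [math.sin(2 * math.pi * freq * i / sample_rate) for i in range(count)]


def level_at(signal, freq, sample_rate):
    """Amplitude of one frequency component, measured with a single-bin DFT."""
    re = sum(s * math.cos(2 * math.pi * freq * i / sample_rate)
             for i, s in enumerate(signal))
    im = sum(s * math.sin(2 * math.pi * freq * i / sample_rate)
             for i, s in enumerate(signal))
    return 2 * math.hypot(re, im) / len(signal)


def add_second_harmonic(signal, amount):
    """Hypothetical even-order generator (an assumption, not Eilex's method).

    Squaring a sine gives sin^2(x) = 0.5 - 0.5*cos(2x): a DC offset plus a
    component at twice the original frequency.
    """
    return [s + amount * s * s for s in signal]


# 10 ms of a 15 kHz tone: inside the CD band, with a 30 kHz second harmonic
# that falls above the CD Nyquist limit but below the 96 kHz one.
source = sine(15_000, HIRES_RATE, 960)
processed = add_second_harmonic(source, amount=0.001)

print("CD Nyquist limit (Hz):", nyquist(CD_RATE))
print("30 kHz level before:", level_at(source, 30_000, HIRES_RATE))
print("30 kHz level after: ", level_at(processed, 30_000, HIRES_RATE))
```

The 15 kHz tone itself is left untouched, and a new component appears at 30 kHz where there was none. Squaring also introduces a small DC offset, which a real process would presumably need to filter out.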

Figure 1 – This is the spectrum of a 44.1 kHz CD specification file in a 96 kHz graph. Notice the hard flat line at 22 kHz. This is a high-resolution PCM file from AIX Records called “Mosaic” by Laurence Juber.

Is it possible to cleverly add the missing partials back into a standard-resolution file? I would imagine it is possible to create some new ultrasonic components. But will having them make a track sound better?

To be continued…

I bet it sounds different, but whether it is better will depend on the listener. It will be equivalent to adjusting the volume or adding equalization. Very interesting though!

This could have some merit for a limited subset of available music. I’ll write more about it in the next post. However, if they’re doing what I think they’re doing, it won’t be any louder or have any different color or equalization. They are merely synthesizing the missing uppermost octave of harmonics. They do nothing for dynamic range.

So there’s little to no benefit for us “old guys” who can’t hear the top octaves anyway? 😉

It’s not just us old guys…human hearing doesn’t respond in traditional ways above 20 kHz.

Hi-Res is not for hearing the ultrasonic, but for a smoother and more natural audible range. If you can enjoy live music, you will enjoy Hi-Res.

A standard-resolution recording at CD specs can be wonderfully smooth and natural. If you’re changing the balance of harmonics, then the resultant files aren’t high-res, they are simply modified CD res recordings. In my own recordings, I find them to be smooth, natural, and highly detailed at all resolutions.

I guess I was implying that, to the average listener, the difference in an A/B test would be equivalent to boosting volume or specific frequencies. It is great they are attempting to innovate though!

Fair enough.

The in-band (audible) frequency response must be kept FLAT. No change in the loudness. (But, to be exact, the newly added ultrasonic information has some energy, which is a mere -100 dB or below.) This information is added to the original waveform in the time domain.
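For a sense of scale, the following Python sketch converts the quoted -100 dB figure into a linear amplitude and compares it with one quantization step of 16-bit audio. It assumes the figure is measured relative to full scale, which the comment does not state, and the helper names are illustrative only.

```python
import math


def db_to_amplitude(db):
    """Convert a level in dB (relative to full scale) to a linear ratio."""
    return 10 ** (db / 20)


def amplitude_to_db(ratio):
    """Convert a linear amplitude ratio to dB."""
    return 20 * math.log10(ratio)


added_level = db_to_amplitude(-100)   # linear amplitude of the added harmonics
lsb_16bit = 1 / 2 ** 15               # one 16-bit step, with full scale = 1.0

print("added harmonics (linear):", added_level)
print("16-bit step (linear):    ", lsb_16bit)
print("16-bit step (dB):        ", round(amplitude_to_db(lsb_16bit), 1))
```

Under that assumption, the added content is smaller than a single step of a 16-bit word, so it only survives in a container with a longer word length.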

The ultrasonic additions are indeed very low amplitude signals and unlikely to be audible even if produced through super tweeters. If they are that low and beyond the “audible band” then they won’t contribute to fidelity changes.

One thing that really struck me about the recent Soundbreaking series was how little the discussion went to fidelity with regard to the in-studio recording process (though, to be fair, it was focused more on the “production” aspect than on “engineering”). What I tried to take away, though, was that it’s the idea, the emotion, the response-evoking aspect that artists seem most concerned with capturing, and so I’m trying to remind myself that, really, as long as my listening experience isn’t noticeably detracting from those things, then I’m probably in better shape than the masses. Of course, these are all subjectives and immeasurables, so short of having the band/musician/performer come over and listen along and give the thumbs up, we’re all just taking our best shot at it…in my opinion.

I can’t imagine spending more of my time worrying, adjusting, measuring, tweaking, and whatever else, chasing whatever “better” is, than just listening to and enjoying the music itself. I certainly appreciate the work of the guys out there who are essentially doing just that for the rest of us, though, which is what brought me here in the first place, so thanks!

Andrew, I think your assessment of the typical production process is spot on. The artists, engineers, and producers are looking for the essential part, instrumental color, harmony, lyric turn, or groove far more than the dynamic range, frequency response, or spatial depth. That’s just the way it is.

Perhaps doing something similar with CDs as RIAA on Vinyl.

RIAA curves exist for different reasons than this process.

It won’t be the original though even if it sounds “better” to some (and certainly not high-definition by any stretch of the imagination). This reminds me of tubes adding second-order harmonic distortion. Maybe I can call that high-res too!

Btw, at the NYAS, the TEAC guy said that their high-end line includes some kind of digital process to “restore MP3s to CD quality.” No idea.

The DSEE process from SONY is another one. There is no way to restore something that’s been removed through encoding processes like MP3. They are lossy and thus throw away some of the source.

CD is a lossy compression medium, although it is not as bad as MP3. It limits the frequency response to 22.05 kHz and the dynamic range to 96 dB. We are trying to recover the lost information in both the time and frequency domains.

Your claim that “CD is a lossy compression media” is not accurate and bears no relationship to actual compressed formats like AAC, MP3, or others. The “limiting” of the frequency response to 22.050 kHz is no limit at all. That’s the upper frequency of human hearing and the result of the sampling rate (Shannon-Nyquist). The potential dynamic range is theoretically 96 dB (although dither reduces that to 93 dB), but there are very few commercial recordings that come anywhere close to that level. Most are less than 30-48 dB. There is no information to be recaptured in a CD recording because it is uncompressed audio.
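The 96 dB figure follows from the word length alone. As a rough check, this Python sketch computes the theoretical dynamic range of linear PCM as 20·log10(2^bits); it ignores dither and noise shaping, and the function name is illustrative.

```python
import math


def pcm_dynamic_range_db(bits):
    """Theoretical dynamic range of linear PCM for a word length, ignoring dither."""
    return 20 * math.log10(2 ** bits)


for bits in (16, 24):
    print(bits, "bits:", round(pcm_dynamic_range_db(bits), 1), "dB")
```

That works out to roughly 6 dB per bit, which is where the familiar 96 dB for 16-bit audio comes from.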

I was trying to express my frustration with the outdated CD format by calling it digitally compressed media. (My apologies for not being theoretically correct.) I certainly respect the CD format, developed 40 years ago, which managed to store what was then a huge amount of digital data using U-matic tapes before the PC age. But it is not the optimum medium for modern recordings. It limits both the frequency and dynamic ranges. New recordings are often made in 96/24 format, but unfortunately they have to be reduced to CD format for distribution. We have DVD media now, which easily takes 48 or 96 kHz/24-bit data at almost the same cost as CD.

When we check 96/24 Hi-Res recordings, we see a good amount of information above 20 kHz, even at levels below -96 dB. We want to keep it rather than discard it.

Your position on the CD format needs some clarification. It is not a compressed format, as you correctly acknowledge in your reply. And it is an absolutely terrific distribution platform for commercial music. When you say it is limited in both “frequency and dynamic ranges”, that’s true only for audiophile projects. Ultrasonic information (above 20 kHz) can be produced by a wide variety of instruments but is generally regarded as inaudible for humans. Maybe there’s reason to believe that these frequencies have some effect on our perception of music, but they clearly aren’t required for music enjoyment. I personally support the inclusion of the ultrasonic octave in the recordings that I produce. If the sound was produced during the session, then it deserves to be captured and reproduced. Unfortunately, while our source music formats and components can pass these frequencies, most of our speakers and headphones cannot. So it’s a question of assembling a very high performance system.

As for dynamic range, the same practical constraint rules. Point me to a commercially released recording (non-audiophile) that needs more dynamic range than a CD provides (93 dB accounting for dithering) and I’ll consider the necessity to increase the delivery word length. For professional audio engineers like myself, 24 bits or even 32 bits is a benefit. But the standard record production process doesn’t need or want excessive dynamic range. Most rock/country/pop albums don’t have more than 30-50 dB of dynamic range, which can be captured with far fewer than 16 bits. Some producers and engineers record at 96 kHz/24 bits but most still use 48 kHz/24 bits. Sadly, they aren’t really interested in high fidelity. They want a particular sound, sometimes with very limited fidelity.
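To put a number on “far fewer than 16 bits”, here is a small Python sketch that inverts the usual 6 dB-per-bit rule of thumb. It is a back-of-the-envelope estimate that ignores dither and headroom, and `bits_needed` is a made-up helper name.

```python
import math

DB_PER_BIT = 20 * math.log10(2)   # about 6.02 dB of range per bit


def bits_needed(dynamic_range_db):
    """Smallest whole word length whose theoretical range covers the given dB."""
    return math.ceil(dynamic_range_db / DB_PER_BIT)


for dr in (30, 50):
    print(dr, "dB needs about", bits_needed(dr), "bits")
```

By this estimate, a 30 dB album fits in about 5 bits and a 50 dB album in about 9.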

The myth or hoax of high-resolution audio has been detailed on this site for years. While it is possible to exceed the limitations of CDs, it is not necessary or even desired by the mass music consuming marketplace. All of the marketing hype, acronyms, new fidelity tweaks, formats, increased sample rates/word lengths, and testimonials won’t alter the fact that fidelity is NOT a major factor in the production of hit records.

Let a clarinet play an A# and then an oboe. The harmonic overtones will be different for the two instruments. How do you know which harmonics need to be added? Sounds a bit weird to me.

Regards

I don’t think the addition of the ultrasonic octave is dependent on the individual instruments. They must analyze the existing spectra of ALL instruments and calculate what the next octave would be. I’ll be interested in hearing what it can do.

We don’t need to worry about the scale or instruments. We can simply calculate the harmonics (only even order harmonics) from the given signal. The amount of the harmonics and the applied taper towards the higher frequencies are critical.

Why limit your recreation algorithm to even-numbered partials? Instruments (like the clarinet) produce odd-numbered partials. And everything you generate would have to be in the ultrasonic range because lower frequencies, all of them, are accurately captured using PCM at normal CD specs.

We actually developed a tool to generate all even- and odd-order harmonics. It could even change their polarity and strength individually. After numerous tests, we found that the even-order harmonics satisfy the purpose. That also allowed us to cut the MIPS of the software in half. This is very important for use in actual products, especially battery-operated portables.

By the way, we can use an even- and odd-order in-band harmonic generator to improve the sound quality of digitally compressed audio such as MP3 and AAC.

Come on Doc, this is a load of malarkey. A fancy digital version of the BBE Sonic Maximizer? Worse, really. The harmonics won’t be natural, won’t be the correct harmonics, and they are all happening at frequencies humans do not hear. These guys must be desperate.

I’m not so sure I would dismiss this as impossible or without benefit. Sure, most of the DSEE and other schemes for upgrading MP3 fidelity are bogus, but it is theoretically possible to recreate exactly the right ultrasonics from a frequency-limited file. I’m planning on testing them by offering up a 44.1/16 version of an HD file and seeing if their version matches my actual file. I say give them a chance.

There is a limit to what human beings can hear. Each of us has different limits and it changes from time to time for many reasons but usually degrades with age. The softest sound, the loudest sound, the highest frequency, the lowest frequency, and incremental changes of them all have their limits of perception. When you say improve fidelity, exactly what does that mean? The ability to manipulate electrical signals has taken a giant leap forward in the last couple of decades. Powerful tools that are no longer impossibly expensive or take a computer whiz to use are readily available. We’d all like to think our hearing ability is limitless but testing it brings us down to earth. They say were it not for Newton there’d have been no Einstein. And were it not for Fourier there would have been no Shannon and no Nyquist. I don’t know why audiophiles constantly resist what has become bedrock mathematics for scientists and engineers in practically every field for nearly 200 years. “But we don’t listen to sine waves.” No you don’t but you probably won’t understand it until you do the math. Start with a couple of years of calculus and analytic geometry and you’ll be prepared to grind out Fourier transforms, inverse transforms, and understand what insight they impart. Few people who don’t study electrical engineering ever study Fourier but you can’t become an electrical engineer without it. Too bad, it applies to all fields of science and engineering.

So if we can manipulate these signals in loudness and frequency, adding, subtracting, squeezing them, expanding them, changing them any which way we want to, is there anything else that can be done to alter them when they become sound and reach our ears? YES! You can manipulate them in time and space. This is an area I’ve explored for forty years and it was a great deal of fun. Changing these variables in an infinite number of ways can produce drastic perceived results. Some are greatly enhancing. Some are horrible beyond words. You can do this in any number of ways. You can also simultaneously manipulate the signal in many other ways, including every other effect such as changing pitch, phasing, flanging, and all of the other things that twist and mold sound. You’ll need different kinds of equipment to do it though: digital processors, lots more amplifiers, speakers, equalizers. One very disturbing thing occurred to me about this new ability…it can potentially be dangerous. I don’t know if you can drive someone insane misusing these techniques and I’ve never been tempted to try to find out. These tools are every bit as powerful as the more usual tools and open up a new universe of possibilities. There are few people exploring them. Just call me Columbus.

Hi everybody. I accidentally found this column discussing Eilex HD Remaster. My name is Yoshi Asahi. I am the developer of this technology at Eilex. Hi-Res audio has become popular recently, but many people have difficulty understanding it. Well, Hi-Res recording may be easier to understand, but Hi-Res up-conversion from a lower-level format, like CD, may be confusing. I will reply to the comments already posted one by one. I hope that helps to answer the questions you may have.

Yoshi, thanks so much for coming by. I’m intrigued by your development and have been considering reaching out to you to see if the “Mosaic” clip from my catalog could be brought back to hi-res status using your process.

Yes, you are welcome to send me any test materials you have. If they were originally recorded in Hi-Res and down-converted to CD, I will try to bring them back to near original status.

Yoshi, this might be very informative and open some opportunities for both of us. If you have a Dropbox or FTP site that I can use to send a 44.1 kHz/16-bit file, I’ll make it available asap. I have the original tracks at 96 kHz/24 bits. None of my tracks are mastered, and they exhibit very wide dynamic range and frequency response. Thanks for the offer.

Hi Mark, I have submitted a message through this website’s contact form. I look forward to continuing the discussion by email.

Hey Mark, Fellow member of the Detroit diaspora here. I’ve read, enjoyed, and learned a great deal from your blog posts. Haven’t seen any lately and hoped it was due to your book, studio, and teaching commitments and not health issues. Thinking about you. Keep it burning.

Hi Tom. Yes, things have been focused on the book, CES, and holidays. I’ve just returned from a long weekend on the ski slopes of Big Sky, Montana. Stay tuned, we’re getting close.

It seems that discussions regarding the potential benefits of HRA are limited by failing to take account of the perceptual implications of the harmonics within complex sounds. Our current understanding of hearing is based on research with sine wave ‘tones’ (rudimentary trigonometric functions without harmonics).

Even if you were able to have full bandwidth to 20 kHz, end-to-end, throughout the sound recording, editing, encoding and playback chain, this would only capture up to the 2nd harmonic (1st overtone) of a complex 10 kHz sound; or the 4th harmonic of a 5 kHz sound, or the 8th harmonic of a 2.5 kHz sound, etc.
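The arithmetic behind those examples is simple enough to sketch in Python. The helper below is illustrative only; it counts harmonics of an ideal harmonic series and assumes a brick-wall bandwidth limit.

```python
def highest_harmonic(fundamental_hz, bandwidth_hz):
    """Number of the highest harmonic that still fits inside the bandwidth."""
    return int(bandwidth_hz // fundamental_hz)


for fundamental in (10_000, 5_000, 2_500):
    print(
        fundamental, "Hz fundamental:",
        "harmonic", highest_harmonic(fundamental, 20_000), "at 20 kHz,",
        "harmonic", highest_harmonic(fundamental, 40_000), "at 40 kHz",
    )
```

Doubling the bandwidth to 40 kHz doubles the count in each case, for example from the 2nd to the 4th harmonic of a 10 kHz sound.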

Standard digital frequency bandwidth-resolutions up to 20 kHz severely truncate the upper harmonic content of complex sounds, resulting in the loss of many harmonic interactions occurring within real world sounds. One example of the audible implications of harmonic interactions occurs with the perception of simple combination tones. Further, it seems that combination tones are only surface-level examples of a more comprehensive perceptual process.

Unfortunately, without the use of test recordings at full bandwidth resolution, end-to-end, up to 20 kHz (standard) and 40 kHz (high), A/B comparisons have only marginal meaning. In effect, current A/B testing is merely demonstrating the application of a higher sampling rate to standard resolution (20 Hz-20 kHz) sounds.

Dolores, thanks for the comments. You’re absolutely correct that partials of high or even moderately high frequencies get truncated in current standard resolution audio. And they also get truncated in the real world. Our ears don’t respond to those ultrasonic frequencies, so they don’t really matter unless, as you say, they corrupt audible frequencies. The issue of combination tones is real. I believe in using higher sampling rates for a variety of reasons; it’s not just about capturing ultrasonics.